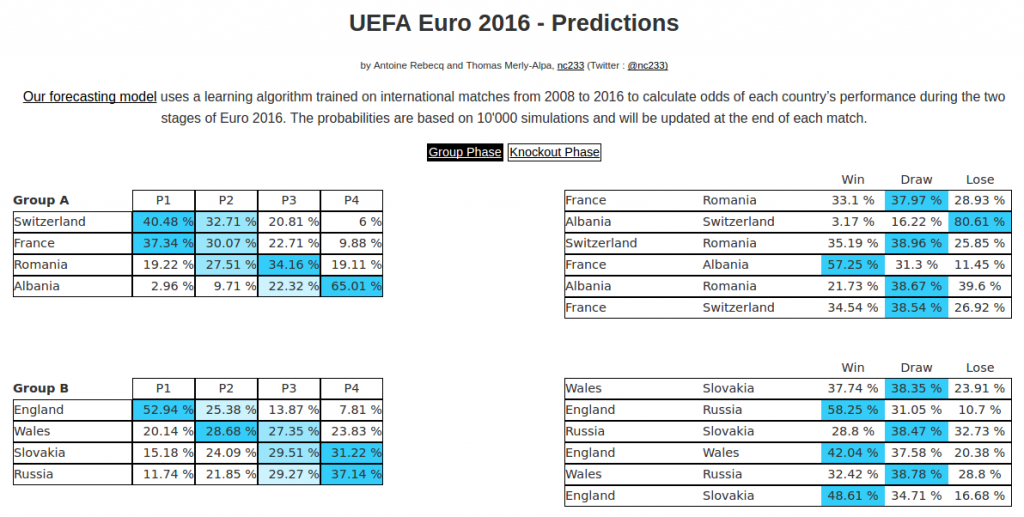

Today I found, via FiveThirtyEight, a riddle about arithmetic progressions called “Dodging 9s”. The question is to find the longest arithmetic progression in which no term contains the digit 9. (An arithmetic progression is a sequence of numbers in which each term equals the preceding one plus a constant, called the common difference; for instance 4 7 10 13 16, whose common difference is 3.) The distribution of the integers that contain a 9 is shown in the figure above, where the first row is 1 to 100, the second 101 to 200, and the last 9 901 to 10 000.
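The grid behind this kind of figure can be rebuilt in a few lines of R. This is only a sketch: the helper name `has_nine` and the 100 by 100 layout are my own assumptions for illustration, not the code that produced the picture.

```r
# Hypothetical helper (not from the original post): TRUE when the decimal
# writing of n contains the digit 9. format() avoids scientific notation.
has_nine <- function(n) {
  grepl("9", format(n, scientific = FALSE, trim = TRUE))
}

n <- 1:10000
flags <- has_nine(n)

# One row per block of 100 integers: row 1 is 1..100, row 100 is 9901..10000.
grid <- matrix(flags, nrow = 100, ncol = 100, byrow = TRUE)

sum(flags)      # number of integers up to 10 000 that contain a 9
sum(grid[1, ])  # number of them in the first row (1..100)
```

Calling `image(t(grid)[, 100:1])` on this matrix should give a rough equivalent of the picture, with one tile per integer.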

This distribution leads me to think that the progression should avoid the black areas by “jumping” over the ranges of integers that contain a 9. For instance, the progression 8 32 56 80 104 128 152 176 200 224 248 272 avoids the 90s and the 190s (but not the 290s, sadly: the next term would be 296). In order to determine the best progression, I will use R to calculate the length of the acceptable progression for multiple starting points and common differences. The following functions use regex to compute the length of the longest acceptable progression for given parameters:

nine <- function(x) {

if (length(grep("9", x)) > 0) {return(1)}

else {return(0)}

}

progression_length
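As a minimal sketch of what such a pair of helpers could look like: the names `contains_nine` and `run_length` and the `max_terms` cap are hypothetical and chosen for illustration, so this should not be read as the original `nine` and `progression_length` code.

```r
# Hypothetical helper: TRUE when the decimal writing of n contains a 9.
contains_nine <- function(n) {
  grepl("9", format(n, scientific = FALSE, trim = TRUE))
}

# Number of consecutive terms start, start + step, start + 2 * step, ...
# that contain no 9. `max_terms` is an assumed safety cap so that the loop
# always stops (for example when step is 0).
run_length <- function(start, step, max_terms = 10000) {
  len <- 0
  term <- start
  while (len < max_terms && !contains_nine(term)) {
    len <- len + 1
    term <- term + step
  }
  len
}

run_length(8, 24)  # the example progression 8 32 56 ... 272
run_length(4, 3)   # 4 7 10 13 16, stopped by 19
```

The loop stops at the first term that contains a 9, so the returned value counts only the clean terms before it.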

Using this function on values between 1 and 10 000 for both the starting point and the common difference allows us to determine the maximum length achievable (within these parameters!). It appears that the longest progression is the one starting at 1 with a common difference of 125, which has 72 terms:

1 126 251 376 501 626 751 876 1001 1126 1251 1376 1501 1626 1751 1876 2001 2126 2251 2376 2501 2626 2751 2876 3001 3126 3251 3376 3501 3626 3751 3876 4001 4126 4251 4376 4501 4626 4751 4876 5001 5126 5251 5376 5501 5626 5751 5876 6001 6126 6251 6376 6501 6626 6751 6876 7001 7126 7251 7376 7501 7626 7751 7876 8001 8126 8251 8376 8501 8626 8751 8876

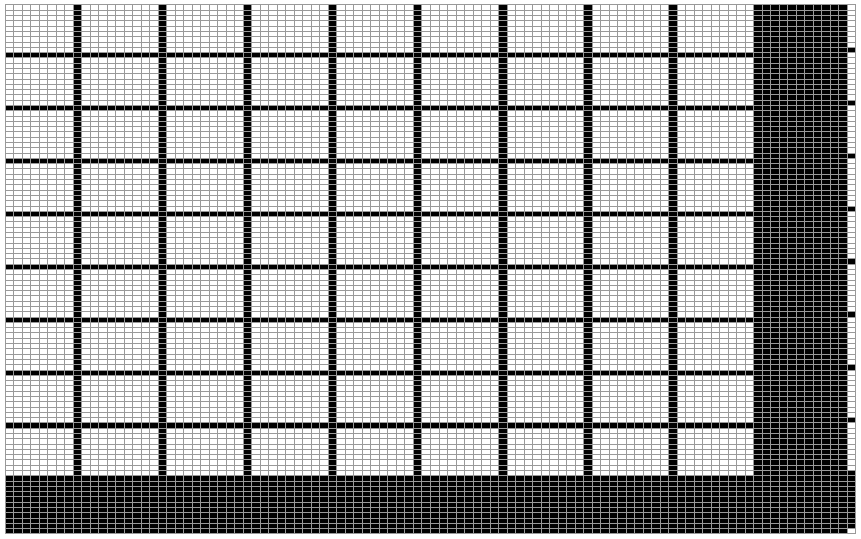

and which is represented in the following graph by the grey tiles:

Adding one more term to the progression leads to 9001, which obviously contains a 9. This little exploration is of course no proof of any maximum length, but it shows that my hypothesis of some “jumps” over the 9 areas wasn’t wrong!
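For readers who want to replay the exploration, here is a scaled-down version of the search. Scanning every pair up to 10 000 takes a while in plain R, so the bounds below (starting points up to 10, common differences up to 200) are arbitrary assumptions picked to run quickly, and `clean_prefix_length` with its `n_terms` cap is a hypothetical helper of mine.

```r
# Hypothetical helper: length of the initial run of terms without a 9,
# looking at no more than `n_terms` terms (an assumed cap).
clean_prefix_length <- function(start, step, n_terms = 200) {
  terms <- seq(start, by = step, length.out = n_terms)
  hit <- which(grepl("9", format(terms, scientific = FALSE, trim = TRUE)))
  if (length(hit) == 0) n_terms else hit[1] - 1
}

# Assumed, deliberately small search bounds.
starts <- 1:10
steps <- 1:200

# lengths[i, j] is the run length for starts[i] and steps[j].
lengths <- outer(starts, steps, Vectorize(clean_prefix_length))
best <- which(lengths == max(lengths), arr.ind = TRUE)
data.frame(start = starts[best[, "row"]],
           step = steps[best[, "col"]],
           length = max(lengths))

# Direct check of the progression discussed above: 72 clean terms, then 9001.
terms <- seq(1, by = 125, length.out = 73)
sum(!grepl("9", format(terms, scientific = FALSE, trim = TRUE)))
terms[73]
```

Several pairs can tie for the maximum within these bounds, which is why the sketch lists every best pair instead of returning just one.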

![[Geekery] Dodging 9s](https://nc233.com/wp-content/uploads/2016/07/9_playing_cards-825x510.jpg)
